What is the difference between K-means and EM?

EM and K-means are similar in that both refine a model iteratively to find the best clustering. They differ in how data items are assigned to clusters. K-means computes the Euclidean distance between each data item and each cluster centroid and assigns the item to the nearest one. EM uses statistical methods instead, estimating the probability that each item belongs to each cluster.
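To make the contrast concrete, here is a minimal Python sketch (standard library only) of the two ways a single point can be assigned. `hard_assign` mimics the K-means assignment; `soft_assign` computes the posterior probabilities an EM E-step would use, assuming spherical Gaussian clusters with a shared standard deviation `sigma` and equal weights by default. Both function names and the example data are illustrative.

```python
import math

def hard_assign(x, centroids):
    """K-means style: index of the nearest centroid (squared Euclidean distance)."""
    dists = [sum((xi - ci) ** 2 for xi, ci in zip(x, c)) for c in centroids]
    return dists.index(min(dists))

def soft_assign(x, means, sigma=1.0, weights=None):
    """EM style: posterior probability of each component for point x,
    assuming spherical Gaussians that share the standard deviation sigma."""
    k = len(means)
    weights = weights or [1.0 / k] * k
    # log(weight) plus the exponent of the Gaussian; the normalizing constant
    # is the same for every component, so it cancels when we normalize
    logs = [math.log(w) - sum((xi - mi) ** 2 for xi, mi in zip(x, m)) / (2 * sigma ** 2)
            for w, m in zip(weights, means)]
    top = max(logs)  # subtract the max for numerical stability
    exps = [math.exp(l - top) for l in logs]
    total = sum(exps)
    return [e / total for e in exps]

centroids = [(0.0, 0.0), (4.0, 0.0)]
point = (1.5, 0.0)
print(hard_assign(point, centroids))                           # 0
print([round(p, 3) for p in soft_assign(point, centroids)])    # [0.881, 0.119]
```

K-means commits the point entirely to the first cluster, while EM keeps about 12% of its weight on the second one.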

- Q. Why is K-means not recommended?

- Q. Is hierarchical clustering better than K-means?

- Q. Is EM a clustering algorithm?

- Q. Is K-means a special case of EM?

- Q. What is EM in machine learning?

- Q. What are the disadvantages of clustering?

- Q. What are the major drawbacks of K-means clustering?

- Q. What is the advantage of hierarchical clustering?

- Q. Is K-means an example of hierarchical clustering?

- Q. What are the steps of the EM algorithm?

- Q. How do you run the EM algorithm?

- Q. How are clusters formed with K-means and EM?

- Q. How does the K-means clustering algorithm work?

- Q. Which is better for GMMs, EM or K-means?

- Q. How are K-means and EM related in machine learning?

Q. Why is K-means not recommended?

K-means assumes that (1) the variance of the distribution of each attribute (variable) is spherical; (2) all variables have the same variance; and (3) the prior probability is the same for all k clusters, i.e. each cluster has roughly the same number of observations. If any one of these three assumptions is violated, k-means is likely to fail.
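A small, hand-made 1-D example (the data are invented purely for illustration) shows what can go wrong when the clusters have very different variances: one wide cluster around 0 and one tight cluster around 10. Even when the centroids start at the true means, k-means puts the boundary halfway between the centroids, so the wide cluster's outer point at 6.0 ends up in the tight cluster. `kmeans_1d` is a hypothetical helper written for this sketch.

```python
def kmeans_1d(points, centroids, max_iter=100):
    """Plain Lloyd iterations on 1-D data; returns (centroids, labels)."""
    centroids = list(centroids)
    for _ in range(max_iter):
        # assignment step: nearest centroid by absolute distance
        labels = [min(range(len(centroids)), key=lambda j: abs(p - centroids[j]))
                  for p in points]
        # update step: each centroid moves to the mean of its points
        new = []
        for j in range(len(centroids)):
            members = [p for p, l in zip(points, labels) if l == j]
            new.append(sum(members) / len(members) if members else centroids[j])
        if new == centroids:  # converged: centroids stopped moving
            break
        centroids = new
    return centroids, labels

wide = [-6.0, -3.0, 0.0, 3.0, 6.0]    # large spread around 0
narrow = [9.5, 10.0, 10.5]            # small spread around 10
centroids, labels = kmeans_1d(wide + narrow, [0.0, 10.0])
print(labels)      # [0, 0, 0, 0, 1, 1, 1, 1]
print(centroids)   # [-1.5, 9.0]
```

The point 6.0 belongs to the wide cluster but is closer to the tight cluster's centroid, and a model that assumes equal spreads has no way to account for that.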

Q. Is hierarchical clustering better than K-means?

Hierarchical clustering can’t handle big data well, but K-means clustering can. This is because the time complexity of K-means is linear in the number of data points n, i.e. O(n) for a fixed number of clusters and iterations, while that of hierarchical clustering is quadratic, i.e. O(n²).
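As a rough back-of-the-envelope comparison (n = 10,000 points and k = 5 clusters are arbitrary choices), one k-means assignment step needs nk point-to-centroid distances, whereas agglomerative hierarchical clustering typically starts from all n(n−1)/2 pairwise distances:

$$
\text{k-means (per iteration): } nk = 10^4 \times 5 = 5 \times 10^4
\qquad
\text{hierarchical: } \frac{n(n-1)}{2} = \frac{10^4 \times 9999}{2} = 49{,}995{,}000 \approx 5 \times 10^7
$$

Doubling n doubles the first count but roughly quadruples the second.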

Q. Is EM a clustering algorithm?

Expectation Maximization (EM) is another popular, though a bit more complicated, clustering algorithm that relies on maximizing the likelihood to find the statistical parameters of the underlying sub-populations in the dataset.

Q. Is K-means a special case of EM?

K-means clustering is a special case of hard EM. We can define a K-means probability model in which each cluster k generates data from N(µ_k, I), where N(µ, I) denotes the D-dimensional Gaussian distribution with mean µ ∈ ℝ^D and the identity covariance matrix.
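Written out explicitly, one version of this model looks like the following. This is a sketch that assumes a uniform prior over the K clusters; the latent cluster label z is notation introduced here for illustration.

$$
p(z = k) = \frac{1}{K}, \qquad p(x \mid z = k) = \mathcal{N}(x \mid \mu_k, I)
$$

Hard EM replaces the posterior over z with its single most probable value. Because the prior is uniform and every component has the identity covariance, that value is simply the nearest mean:

$$
\hat{z} = \arg\max_k \, p(z = k \mid x) = \arg\max_k \exp\!\left(-\tfrac{1}{2}\lVert x - \mu_k \rVert^2\right) = \arg\min_k \lVert x - \mu_k \rVert^2
$$

Given these hard assignments, the maximum likelihood M-step sets each µ_k to the mean of the points assigned to cluster k, which is exactly the K-means centroid update.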

Q. What is EM in machine learning?

The Expectation-Maximization algorithm, or EM algorithm for short, is a general technique for finding maximum likelihood estimates of the parameters of models with latent (unobserved) variables.

Q. What are the disadvantages of clustering?

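In the sense of server clustering (a group of machines jointly running a service, as opposed to the data clustering discussed elsewhere on this page), the disadvantages of clustering are complexity and the inability to recover from database corruption. In a clustered environment, the cluster uses the same IP address for Directory Server and Directory Proxy Server, regardless of which cluster node is actually running the service.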

Q. What are the major drawbacks of K-means clustering?

The most important limitations of Simple k-means are:

- The user has to specify k (the number of clusters) at the beginning.

- k-means can only handle numerical data.

- k-means assumes that we deal with spherical clusters and that each cluster has roughly equal numbers of observations.

Q. What is the advantage of hierarchical clustering?

The advantage of hierarchical clustering is that it is easy to understand and implement. The dendrogram output of the algorithm can be used to understand the big picture as well as the groups in your data.
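The "easy to implement" claim is easy to check. Below is a deliberately naive agglomerative sketch on 1-D points using single linkage (the distance between two clusters is the distance between their closest members), which is one of several possible linkage choices. It runs in roughly O(n³) time as written; practical implementations are faster. The returned merge history is exactly the information a dendrogram draws. `single_linkage` is a hypothetical helper, not a library function.

```python
def single_linkage(points):
    """Naive agglomerative clustering with single linkage on 1-D points.

    Returns the merge history as (cluster_a, cluster_b, distance) tuples.
    """
    clusters = [[p] for p in points]   # start with every point in its own cluster
    merges = []
    while len(clusters) > 1:
        best = None
        # find the pair of clusters whose closest members are nearest
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], d))
        clusters[i] = clusters[i] + clusters[j]   # merge j into i (new list)
        del clusters[j]
    return merges

merges = single_linkage([1.0, 2.0, 6.0, 7.5, 20.0])
for a, b, d in merges:
    print(a, "+", b, "at distance", d)
print([d for _, _, d in merges])   # [1.0, 1.5, 4.0, 12.5]
```

Cutting the tree below distance 4.0 gives three groups ({1, 2}, {6, 7.5}, {20}); cutting below 12.5 gives two. Choosing where to cut is how the dendrogram shows both the big picture and the finer groups.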

Q. Is K-means an example of hierarchical clustering?

No. K-means produces a single flat partition of the data, whereas a hierarchical clustering is a set of nested clusters arranged as a tree. K-means clustering has been found to work well when the clusters are hyperspherical (like a circle in 2D or a sphere in 3D), and hierarchical clustering does not work as well as k-means when the clusters have that shape.

Q. What are the steps of the EM algorithm?

The EM algorithm is an iterative approach that cycles between two modes. The first mode attempts to estimate the missing or latent variables and is called the expectation step, or E-step. The second mode attempts to optimize the parameters of the model to best explain the data and is called the maximization step, or M-step.
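For a concrete case, consider a one-dimensional Gaussian mixture with K components, weights π_k, means µ_k and variances σ_k² (a standard textbook setting, chosen here for illustration). The E-step computes the responsibility γ_ik of component k for data point x_i:

$$
\gamma_{ik} = \frac{\pi_k \, \mathcal{N}(x_i \mid \mu_k, \sigma_k^2)}{\sum_{j=1}^{K} \pi_j \, \mathcal{N}(x_i \mid \mu_j, \sigma_j^2)}
$$

The M-step then re-estimates every parameter from those responsibilities:

$$
N_k = \sum_{i=1}^{n} \gamma_{ik}, \qquad
\pi_k = \frac{N_k}{n}, \qquad
\mu_k = \frac{1}{N_k}\sum_{i=1}^{n} \gamma_{ik} \, x_i, \qquad
\sigma_k^2 = \frac{1}{N_k}\sum_{i=1}^{n} \gamma_{ik} \, (x_i - \mu_k)^2
$$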

Q. How do you run the EM algorithm?

In summary, the two steps of the EM algorithm are:

- E-step: perform probabilistic assignments of each data point to some class based on the current hypothesis h for the distributional class parameters;

- M-step: update the hypothesis h for the distributional class parameters based on the new data assignments.
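The two steps can be sketched for a one-dimensional Gaussian mixture, a common concrete instance. This is a minimal pure-Python version; the function names, the fixed iteration count `n_iter`, the synthetic data and the small variance floor are illustrative choices, not part of the algorithm's definition.

```python
import math
import random

def normal_pdf(x, mu, var):
    """Density of N(mu, var) at x."""
    return math.exp(-(x - mu) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(xs, means, variances, weights, n_iter=50):
    """EM for a 1-D Gaussian mixture; returns updated (means, variances, weights)."""
    means, variances, weights = list(means), list(variances), list(weights)
    n, k = len(xs), len(means)
    for _ in range(n_iter):
        # E-step: resp[i][j] = P(component j | x_i) under the current parameters
        resp = []
        for x in xs:
            row = [w * normal_pdf(x, m, v) for m, v, w in zip(means, variances, weights)]
            total = sum(row)
            resp.append([r / total for r in row])
        # M-step: refit each component to all points, weighted by responsibility
        for j in range(k):
            nj = sum(r[j] for r in resp)
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var, 1e-6)  # floor guards against a collapsing component
            weights[j] = nj / n
    return means, variances, weights

# synthetic data: 200 draws from N(0, 1) and 100 draws from N(5, 1)
rng = random.Random(0)
xs = [rng.gauss(0, 1) for _ in range(200)] + [rng.gauss(5, 1) for _ in range(100)]
means, variances, weights = em_gmm_1d(xs, [-1.0, 6.0], [1.0, 1.0], [0.5, 0.5])
print([round(m, 2) for m in means], [round(w, 2) for w in weights])
```

After a few dozen iterations the estimated means should land near 0 and 5 and the weights near 2/3 and 1/3. A more robust version would compute the E-step in log space to avoid underflow for points far from every component.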

Q. How are clusters formed with K-means and EM?

k-means “assumes” that the clusters are more or less round and solid (not heavily elongated, curved or just ringed) clouds in Euclidean space. They are not required to come from normal distributions. EM does require that (or at least that a specific type of distribution is known). – ttnphns Nov 18 ’13 at 12:21

Q. How does the K-means clustering algorithm work?

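The K-means algorithm works as follows:

1. Specify the number of clusters K.

2. Initialize the centroids by first shuffling the dataset and then randomly selecting K data points for the centroids without replacement.

3. Keep iterating until there is no change to the centroids, i.e. the assignment of data points to clusters no longer changes. In each iteration, assign each data point to its closest centroid, then recompute each centroid as the average of all data points assigned to its cluster.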
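The steps translate almost line for line into code. This is a minimal pure-Python sketch, assuming points are equal-length tuples of numbers. `random.Random.sample` picks K distinct data points without replacement, which has the same effect as shuffling and taking the first K. The `seed` and `max_iter` parameters and the empty-cluster handling are illustrative additions.

```python
import random

def squared_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, seed=0, max_iter=100):
    """Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    # steps 1-2: pick k distinct data points as the initial centroids
    centroids = rng.sample(points, k)
    labels = None
    for _ in range(max_iter):
        # assign each point to its closest centroid
        new_labels = [min(range(k), key=lambda j: squared_dist(p, centroids[j]))
                      for p in points]
        if new_labels == labels:  # step 3: stop when assignments no longer change
            break
        labels = new_labels
        # recompute each centroid as the mean of its assigned points
        for j in range(k):
            members = [p for p, l in zip(points, labels) if l == j]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centroids, labels

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

On this toy data the first three points share one label and the last three share the other; which group is called 0 depends on the random initialization.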

Q. Which is better for GMMs, EM or K-means?

Here, the process and properties of k-means are summarized on the left-hand side and those of Expectation Maximization (EM) on the right-hand side. Note that EM is not, strictly speaking, a clustering algorithm but a way to obtain the maximum likelihood solution of a model such as the Gaussian mixture model (GMM).

Q. How are K-means and EM related in machine learning?

I keep reading the following: “k-means is a variant of EM, with the assumption that clusters are spherical.” Can somebody explain this sentence? I do not understand what spherical means, or how k-means and EM are related, since one does probabilistic assignment and the other does it in a deterministic way.
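One way to see the connection: "spherical" means each cluster's density depends only on the distance from its mean, the same in every direction. The sketch below assumes two equal-weight one-dimensional Gaussian components that share a single standard deviation `sigma` (the 1-D version of the spherical case; `responsibilities` is a hypothetical helper). It computes the EM responsibilities for one point and then shrinks `sigma`. As `sigma` shrinks, the probabilistic assignment hardens into the deterministic nearest-mean assignment that k-means uses.

```python
import math

def responsibilities(x, means, sigma):
    """Posterior over components for scalar x under equal-weight Gaussians
    that all share the same standard deviation sigma (the spherical case)."""
    logs = [-(x - m) ** 2 / (2 * sigma ** 2) for m in means]
    top = max(logs)  # subtract the max so exp() cannot underflow to all zeros
    exps = [math.exp(l - top) for l in logs]
    total = sum(exps)
    return [e / total for e in exps]

means = [0.0, 4.0]
x = 1.5  # closer to the first mean
for sigma in (2.0, 1.0, 0.5, 0.1):
    print(sigma, [round(r, 4) for r in responsibilities(x, means, sigma)])
```

For x = 1.5 the responsibilities go from roughly 0.62/0.38 at sigma = 2 to essentially 1/0 at sigma = 0.1, which is the k-means assignment to the nearer mean at 0.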

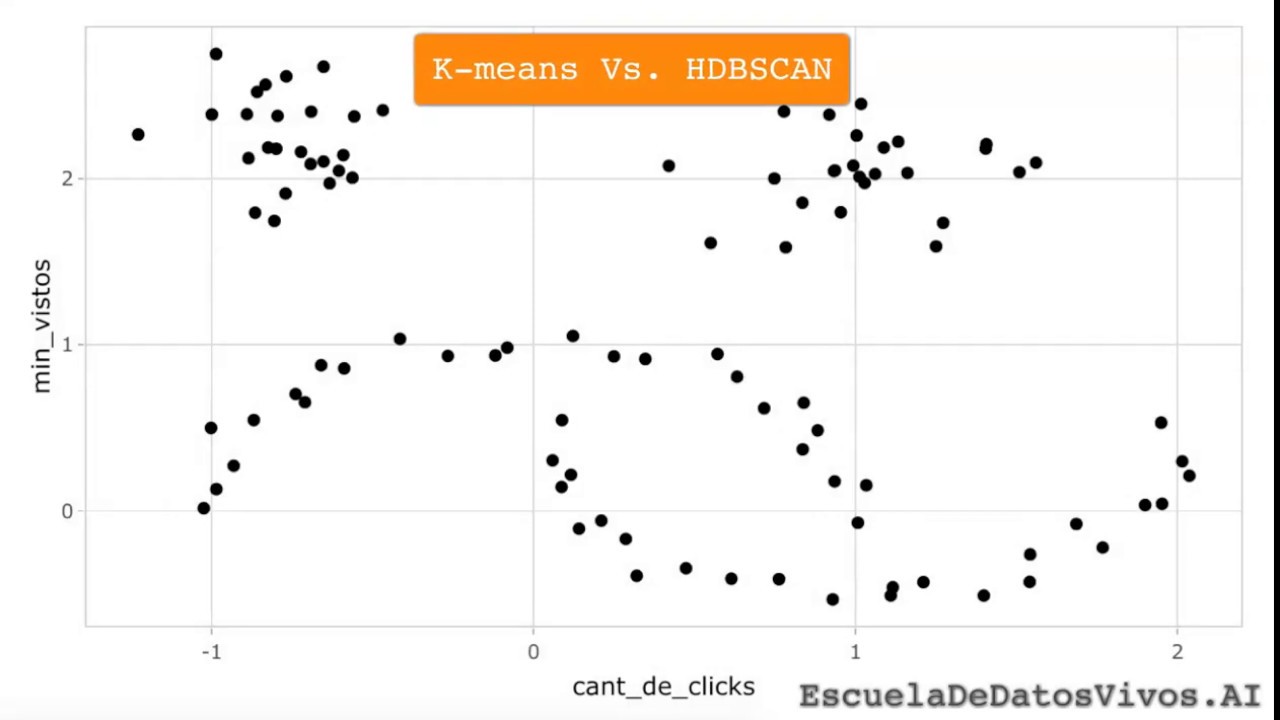

