How do I transfer a HDFS file to AWS S3 bucket?

Re: How to copy HDFS file to AWS S3 Bucket? hadoop distcp is not working.

- Q. How do I copy files to AWS S3 bucket?

- Q. How do I import data into an EMR cluster?

- Q. What is S3 Dist CP?

- Q. How do I transfer files from S3 bucket to S3 bucket?

- Q. How to access Amazon S3 bucket using Hadoop command?

- Q. How are Hadoop files transferred to AWS Snowball edge?

- Q. What does multipart upload do in Apache Hadoop?

- Q. Why do we need S3A committers in Apache Hadoop?

- Configure the core-site.xml file with following aws property :

- Export the JAR (aws-java-sdk-1.7.

- The hadoop “cp” command will copy source data (Local Hdfs) to Destination (AWS S3 bucket) .

Q. How do I copy files to AWS S3 bucket?

Steps to copy files from EC2 instance to S3 bucket (Upload)

- Create an IAM role with S3 write access or admin access.

- Map the IAM role to an EC2 instance.

- Install AWS CLI in EC2 instance.

- Run the AWS s3 cp command to copy the files to the S3 bucket.

Q. How do I import data into an EMR cluster?

The most common way is to upload the data to Amazon S3 and use the built-in features of Amazon EMR to load the data onto your cluster. You can also use the DistributedCache feature of Hadoop to transfer files from a distributed file system to the local file system.

Q. What is S3 Dist CP?

S3DistCp is similar to DistCp, but optimized to work with AWS, particularly Amazon S3. You can also use S3DistCp to copy data between Amazon S3 buckets or from HDFS to Amazon S3. S3DistCp is more scalable and efficient for parallel copying large numbers of objects across buckets and across AWS accounts.

Q. How do I transfer files from S3 bucket to S3 bucket?

To copy objects from one S3 bucket to another, follow these steps:

- Create a new S3 bucket.

- Install and configure the AWS Command Line Interface (AWS CLI).

- Copy the objects between the S3 buckets.

- Verify that the objects are copied.

- Update existing API calls to the target bucket name.

Q. How to access Amazon S3 bucket using Hadoop command?

If there any way how to access amazon S3 bucket using Hadoop command without specifying Keys in core-site.xml. I’d prefer to specify Keys in command line.

Q. How are Hadoop files transferred to AWS Snowball edge?

As a best practice, Hadoop file transfers to AWS Snowball Edge use an intermediary staging machine with HDFS mounted to the local file system. Mounting HDFS allows you to interact with it as a local file system. The staging machine is then used for high throughput parallel reads from HDFS and writes to AWS Snowball Edge.

Q. What does multipart upload do in Apache Hadoop?

The key concept to know of is S3’s “Multipart Upload” mechanism. This allows an S3 client to write data to S3 in multiple HTTP POST requests, only completing the write operation with a final POST to complete the upload; this final POST consisting of a short list of the etags of the uploaded blocks.

Q. Why do we need S3A committers in Apache Hadoop?

These committers are designed to solve a fundamental problem which the standard committers of work cannot do to S3: consistent, high performance, and reliable commitment of output to S3. For details on their internal design, see S3A Committers: Architecture and Implementation.

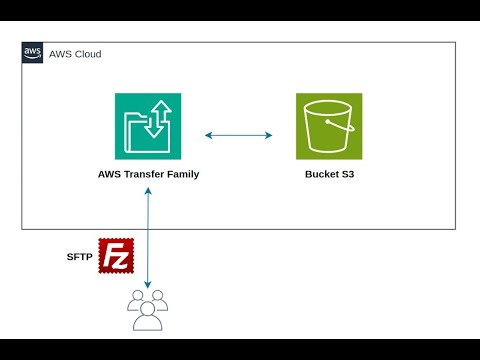

En este tutorial, te mostrare cómo utilizar AWS Transfer Family para transferir tus datos de manera segura y eficiente. Desde configurar el servidor SFTP has…

No Comments